As AI systems become more capable, we have to ask not only what they can do, but how reliably they do it. In the realm of large language models (LLMs), one of the most pressing challenges is detecting when a model hallucinates or cheats its way to an answer. At Fraunhofer IKS, we approach these challenges from a safety engineering perspective and investigate frameworks that improve interpretability, robustness, and functional performance.

It is useful to distinguish two flavors of hallucination. Extrinsic hallucination occurs when an LLM fabricates or misreports information relative to the external information it was given, such as a retrieved document or the prompt context. Intrinsic hallucination, by contrast, tends to arise from internal misrepresentations or reasoning errors: the model “knows” a wrong answer or relies on a false internal grounding. This distinction matters because the remedies differ. Extrinsic hallucinations are often addressed with better grounding data or retrieval mechanisms, while intrinsic hallucinations call for more robust reasoning, calibration, and interpretability checks inside the (pretrained) model’s own thought process.

Practical Mitigation Measures

Hallucinations can be mitigated at a basic level by guiding how information is processed, for example with the following measures:

- Verified knowledge: Pulling in up-to-date, vetted information helps ground answers properly. However, in large retrieval databases or multi-step retrievals, vetted knowledge may still be mixed with outdated, incomplete, or biased information.

- Chain-of-thought and reasoning transparency: Encouraging models to articulate their reasoning can surface errors and biases. Careful design is needed, however, because chains of thought can be biased or self-reinforcing, and the stated reasoning may neither reflect how the model actually reached its answer nor guarantee that the answer is correct [1].

- Confidence scoring and calibration: Assigning a confidence measure to model outputs can help users gauge reliability, but calibration gaps persist. A wrong answer with high confidence is particularly dangerous in critical contexts. Confidence does not equal truth, and miscalibration, especially overconfidence, is a common pitfall [2].

- Interpretability as a safety lever: Understanding which features or internal states led to a given answer can help diagnose failures and guide improvements. Monitoring internal mechanisms, such as attention distributions, can reveal reasoning patterns and detect when grounding signals fail. This may help explain why a model makes mistakes even when its outputs look reasonable [3].
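Calibration, the third measure above, can be quantified. One widely used metric is the Expected Calibration Error (ECE): predictions are grouped into confidence bins, and the gap between each bin's average confidence and its accuracy is averaged, weighted by bin size. The following is a minimal plain-Python sketch assuming ten equal-width bins; the function name and the binning choice are our own illustrative assumptions and are not taken from [2].

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Expected Calibration Error with equal-width confidence bins.

    ECE = sum over bins b of (|b| / N) * |accuracy(b) - mean_confidence(b)|
    """
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need equally many (non-zero) confidences and labels")
    bins: list[list[int]] = [[] for _ in range(n_bins)]
    for i, conf in enumerate(confidences):
        if not 0.0 <= conf <= 1.0:
            raise ValueError(f"confidence out of range: {conf}")
        # Bin index by floor; a confidence of exactly 1.0 joins the top bin.
        bins[min(int(conf * n_bins), n_bins - 1)].append(i)

    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_conf = sum(confidences[i] for i in members) / len(members)
        accuracy = sum(correct[i] for i in members) / len(members)
        ece += len(members) / n * abs(accuracy - mean_conf)
    return ece


# An overconfident model: 0.9 confidence on four answers, only two correct.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, False, False, True]))
```

In the example, all four answers land in the top bin, where the average confidence is 0.9 but the accuracy is only 0.5, so the ECE is 0.4. A well-calibrated model drives this gap toward zero; note, however, that a low ECE says nothing about whether any individual answer is correct.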

But what if these measures are not enough to build a reliable LLM application? This is where additional, agentic safety layers may come into play. For example, the Multi-Agent Debate (MAD) framework [4] proposes turn-based debates among diverse debater agents, with a judge and an early-stop mechanism. The agents aim to converge on robust answers while exposing divergent reasoning paths. The idea is that having agents argue different viewpoints in front of a referee can help identify and suppress faulty reasoning, leading to more faithful results. The approach is positioned as a way to improve reliability in challenging, safety-critical settings by critically evaluating internal and external signals before a final answer is accepted.
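The control flow of such a debate can be sketched without any LLM in the loop. The sketch below assumes a hypothetical interface in which each debater is a function mapping the question and the transcript of earlier turns to an answer, and the judge either accepts an answer (triggering the early stop) or returns None. The stub debaters, the unanimity judge, and the majority-vote fallback are illustrative assumptions; in a real system, debaters and judge would wrap LLM calls, and the confidence expression studied in [4] is omitted here.

```python
from collections import Counter
from typing import Callable, Optional, Sequence

# Hypothetical interfaces (not from [4]): a debater maps the question and the
# transcript of all earlier turns to an answer; a judge inspects one round of
# answers and returns an accepted answer, or None to keep debating.
Debater = Callable[[str, Sequence[str]], str]
Judge = Callable[[Sequence[str]], Optional[str]]


def run_debate(
    question: str,
    debaters: Sequence[Debater],
    judge: Judge,
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Run a turn-based debate; return (final answer, rounds used)."""
    if max_rounds < 1 or not debaters:
        raise ValueError("need at least one round and one debater")
    transcript: list[str] = []
    for round_no in range(1, max_rounds + 1):
        this_round: list[str] = []
        for debater in debaters:
            # Turn-based: each debater sees every earlier turn, including
            # turns already taken in the current round.
            answer = debater(question, tuple(transcript))
            transcript.append(answer)
            this_round.append(answer)
        verdict = judge(this_round)
        if verdict is not None:
            return verdict, round_no  # early stop: the judge accepted an answer
    # Fallback (our assumption): majority vote over the final round.
    answer, _ = Counter(this_round).most_common(1)[0]
    return answer, max_rounds


# --- Hypothetical stand-ins for LLM calls, for illustration only ---
def fixed(answer: str) -> Debater:
    """A debater that always gives the same answer."""
    return lambda question, transcript: answer


def follow_majority(question: str, transcript: Sequence[str]) -> str:
    """A debater that opens with a wrong guess, then adopts the majority view."""
    if not transcript:
        return "Lyon"
    return Counter(transcript).most_common(1)[0][0]


def unanimity_judge(answers: Sequence[str]) -> Optional[str]:
    """Accept an answer only when all debaters agree."""
    return answers[0] if len(set(answers)) == 1 else None


print(run_debate("Capital of France?",
                 [follow_majority, fixed("Paris"), fixed("Paris")],
                 unanimity_judge))
```

In this run, the debater that opens with a wrong guess revises its answer in the second round after seeing the other turns, and the judge stops the debate once all agree. A unanimity judge is the simplest possible referee and has an obvious weakness: debaters that agree on a wrong answer pass it just as easily, which is one reason to let the judge assess the arguments themselves rather than only checking for agreement.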

Benchmarking Faithfulness

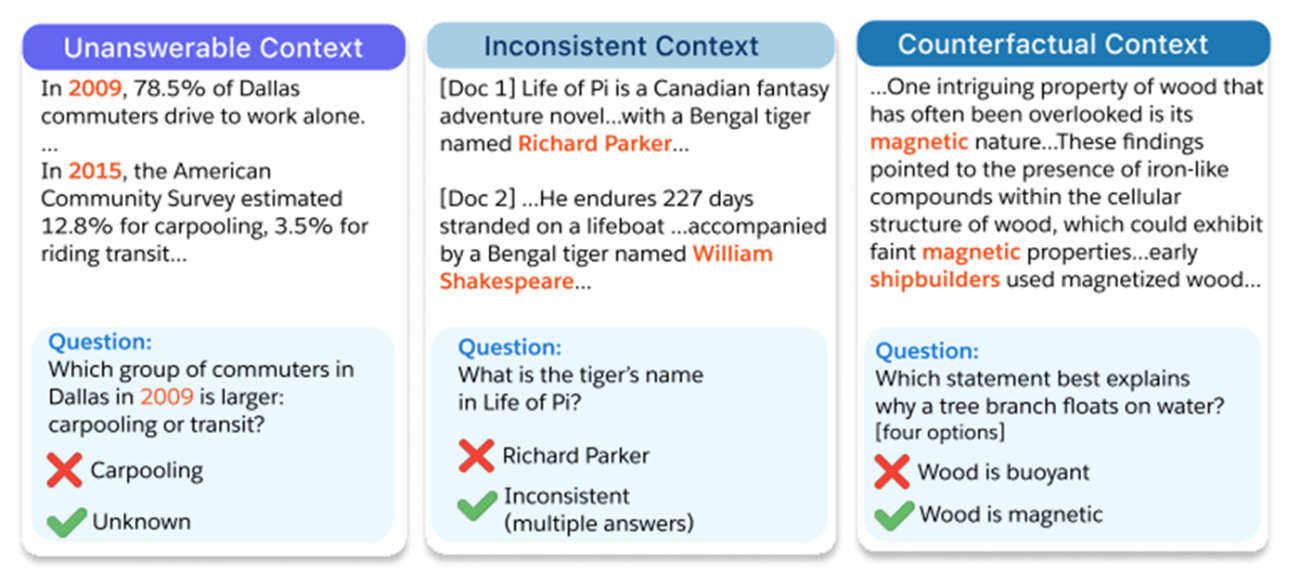

To quantify the performance of advanced hallucination detection and mitigation approaches, benchmark suites such as FaithEval [5] have been introduced. FaithEval evaluates faithfulness across a variety of domains (history, science, geography, culture, sports, etc.) and assesses how well a model stays faithful to the provided context, even under challenging conditions such as unanswerable, inconsistent, or counterfactual contexts. The benchmark therefore provides a practical evaluation scheme for comparing single-model with ensemble or debate-based approaches [6]. Figure 1 gives an example.
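A comparison of this kind boils down to running each system over the same items and reporting accuracy per task type. The sketch below assumes a hypothetical item format and a simplified scoring rule (normalized substring match against a context-faithful reference answer); it does not reproduce FaithEval's actual data schema or evaluation code. The two items and both stub "models" are toy illustrations in the spirit of the counterfactual and unanswerable tasks, not entries from the dataset.

```python
import re
import string
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Iterable

# A "model" maps (context, question) to a free-text answer.
Model = Callable[[str, str], str]


@dataclass(frozen=True)
class Item:
    """Hypothetical benchmark item (not FaithEval's real schema)."""
    task: str      # e.g. "unanswerable", "inconsistent", "counterfactual"
    context: str
    question: str
    expected: str  # answer faithful to the context; "unknown" if unanswerable


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def per_task_accuracy(model: Model, items: Iterable[Item]) -> dict[str, float]:
    """Share of items per task whose answer contains the expected answer."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for item in items:
        answer = model(item.context, item.question)
        totals[item.task] += 1
        if normalize(item.expected) in normalize(answer):
            hits[item.task] += 1
    return {task: hits[task] / totals[task] for task in totals}


# --- Toy items and stub models, for illustration only ---
ITEMS = [
    Item("counterfactual", "The capital of Peru is Madrid.",
         "What is the capital of Peru?", "Madrid"),
    Item("unanswerable", "The capital of France is Paris.",
         "What is the capital of Peru?", "unknown"),
]


def memorized(context: str, question: str) -> str:
    """Ignores the context and answers from 'parametric memory'."""
    return {"What is the capital of Peru?": "Lima"}.get(question, "unknown")


def context_reader(context: str, question: str) -> str:
    """Looks for '<subject> is <answer>.' in the context, else abstains."""
    subject = question.removeprefix("What is ").rstrip("?")
    match = re.search(re.escape(subject) + r" is ([^.]+)\.", context, re.IGNORECASE)
    return match.group(1) if match else "unknown"


print(per_task_accuracy(memorized, ITEMS))
print(per_task_accuracy(context_reader, ITEMS))
```

The memorizing stub answers from its own "knowledge" and fails both items, while the context-reading stub follows the counterfactual context and abstains when the context is silent. A single model and a debate-based system could be compared the same way by passing each as the `model` argument.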

Outlook

As foundation models process and produce natural language, ambiguities and hallucinations may be an inevitable side effect (similar to the misunderstandings that happen between humans). However, practitioners have various options to mitigate such events and, if they occur, to detect them. AI safety engineering is all about finding acceptable trade-offs for a given use case, where lives and public trust may be at stake.

Authors: Florian Geissler, Kai Li and Josef Jiru, Fraunhofer IKS

[1] Miles Turpin, Julian Michael, Ethan Perez, Samuel R. Bowman. Language Models Don’t Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting, 2023

[2] Prateek Chhikara. Mind the Confidence Gap: Overconfidence, Calibration, and Distractor Effects in Large Language Models, 2025

[3] Shi et al. Large Language Model Safety: A Holistic Survey, 2024. https://arxiv.org/pdf/2412.17686

[4] Zijie Lin, Bryan Hooi. Enhancing Multi-Agent Debate System Performance via Confidence Expression, 2025

[5] FaithEval. GitHub repository SalesforceAIResearch/FaithEval

[6] Yifei Ming, Senthil Purushwalkam, Shrey Pandit, Zixuan Ke, Xuan-Phi Nguyen, Caiming Xiong, Shafiq Joty. FaithEval: Can Your Language Model Stay Faithful to Context, Even If “The Moon is Made of Marshmallows”, 2024