It is common practice in large-scale enterprises to organize important factual data as RDF knowledge graphs (KGs, equivalently knowledge bases or KBs). Storing data as RDF KGs has the advantage of a flexible subject-predicate-object (SPO) format, which simplifies the work of moderators by eliminating the need for the complex schemas that equivalent databases require. KGs are usually queried via SPARQL, a data retrieval and manipulation language tailored to graph-based data. Large language models (LLMs) are now used to generate SPARQL queries from the user’s natural language (NL) questions, replacing earlier sophisticated in-house systems.

Like other knowledge repositories, KGs are also conveniently searched and explored via conversational question answering (ConvQA) systems. However, ConvQA comes with a major challenge: vital parts of the question are left implicit by the user, as in dialogue with another human. In preliminary experiments, we found that even for the most capable LLMs like GPT-4o, translating a user’s conversational questions to SPARQL remains a bottleneck, even when representative in-context examples are provided. This problem is exacerbated for more complex intents with conditions, comparisons and aggregations. Moreover, a KG often contains information that can satisfy more abstract intents, and such information cannot be harnessed via SPARQL.

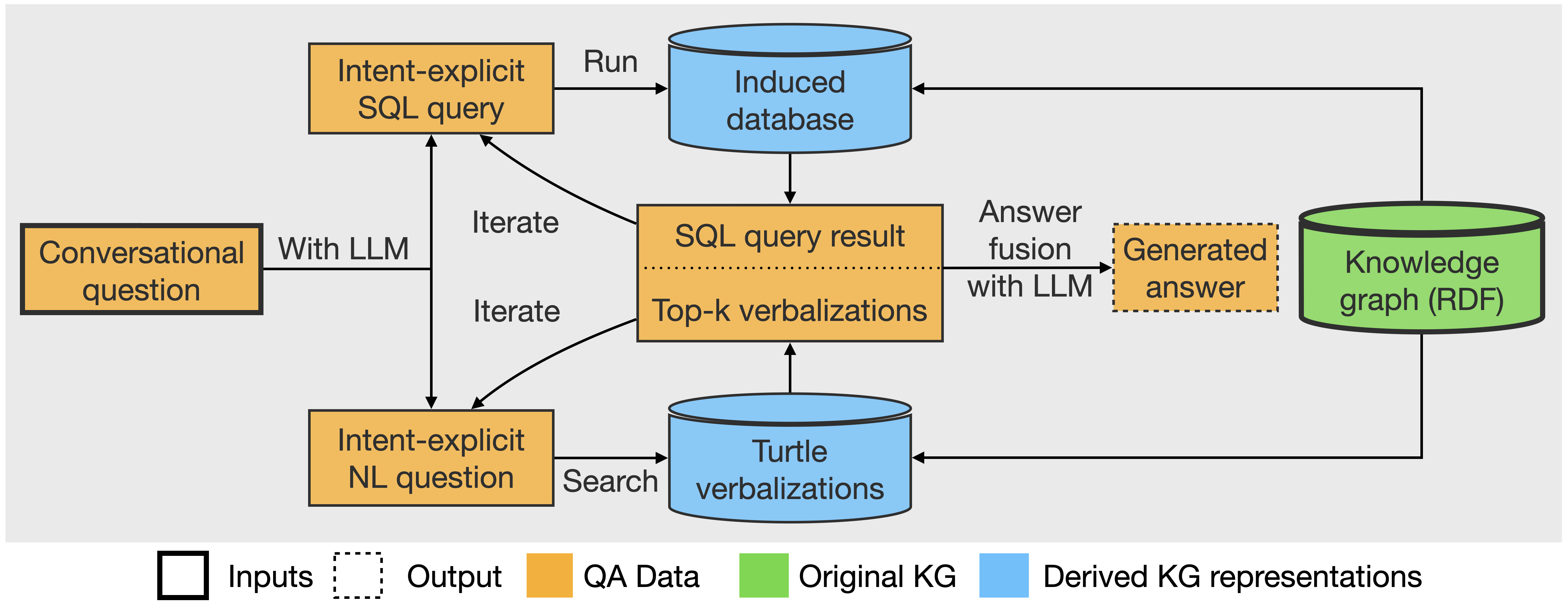

We, the NLP team at Fraunhofer IIS, overcome these limitations with RAGONITE, a novel system that operates with two branches: SQL over induced databases satisfies crisp information needs, including mathematical operations, while less well-defined intents are handled by text retrieval over KG verbalizations. Notably, we allow repeated retrievals if a single round fails to fetch satisfactory information from the backend KG. Finally, the results of both branches are merged by a generator LLM to formulate the answer shown to the user who posed the conversational question. An overview is shown in the figure above.
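The control flow described above can be sketched roughly as follows. This is a minimal illustration and not the RAGONITE implementation: every function name and parameter here (`answer_question`, `sql_branch`, `text_branch`, `is_satisfactory`, `reformulate`, `generate`, `max_rounds`) is hypothetical, and the toy stand-ins replace what would be LLM calls and real backends. Keeping only the evidence from the latest round is likewise an assumption.

```python
from typing import Callable, List


def answer_question(
    question: str,
    sql_branch: Callable[[str], List[str]],
    text_branch: Callable[[str], List[str]],
    is_satisfactory: Callable[[str, List[str]], bool],
    reformulate: Callable[[str, List[str]], str],
    generate: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> str:
    """Run both branches, retry retrieval if needed, then generate one answer."""
    evidence: List[str] = []
    query = question
    for _ in range(max_rounds):
        # Branch 1: SQL over the induced DB; branch 2: text retrieval
        # over KG verbalizations. Their results are pooled.
        evidence = sql_branch(query) + text_branch(query)
        if is_satisfactory(question, evidence):
            break
        # Another retrieval round with a rewritten query (assumed strategy).
        query = reformulate(query, evidence)
    # The generator sees the original question plus the pooled evidence.
    return generate(question, evidence)


# Toy stand-ins (hypothetical) so the sketch runs end to end.
def toy_sql(q: str) -> List[str]:
    return ["sql: 2 rows"] if "sensor" in q else []


def toy_text(q: str) -> List[str]:
    return ["text: passage about " + q] if "sensor" in q else []


def toy_ok(question: str, ev: List[str]) -> bool:
    return len(ev) > 0


def toy_reformulate(q: str, ev: List[str]) -> str:
    # Stands in for making the implicit conversational context explicit.
    return q + " sensor"


def toy_generate(question: str, ev: List[str]) -> str:
    return f"{question} -> {len(ev)} evidence item(s)"


result = answer_question(
    "Which one is newest?", toy_sql, toy_text, toy_ok, toy_reformulate, toy_generate
)
```

In this toy run the first round retrieves nothing because the question leaves its subject implicit, so a second round is triggered with the rewritten query before the answer is generated.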

While Text2SPARQL has been investigated in the past, we adopt the novel alternative of Text2SQL, where LLMs perform much better, over a DB that is programmatically induced from the KG. By converting a KG to a verbalized form that is indexed as a vector DB, we show that the information in knowledge graphs can be useful for more abstract intents as well. For more information, the reader is encouraged to visit our GitHub repository at https://github.com/Fraunhofer-IIS/RAGonite.
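To make the two KG transformations more concrete, here is a small sketch using only the Python standard library. It assumes one possible induction scheme (one table per predicate, with `subject` and `object` columns) and a simple template-based verbalization. Both choices, the example triples, and the helper names `induce_db` and `verbalize` are illustrative assumptions rather than the scheme used in RAGONITE. Embedding the passages into a vector DB is left out.

```python
import sqlite3
from collections import defaultdict

# Hypothetical example triples in subject-predicate-object form.
triples = [
    ("sensor_a", "type", "Sensor"),
    ("sensor_a", "release_year", "2021"),
    ("sensor_b", "type", "Sensor"),
    ("sensor_b", "release_year", "2023"),
]


def induce_db(spo_triples):
    """Induce an in-memory SQLite DB: one (subject, object) table per predicate."""
    conn = sqlite3.connect(":memory:")
    by_predicate = defaultdict(list)
    for s, p, o in spo_triples:
        by_predicate[p].append((s, o))
    for predicate, rows in by_predicate.items():
        # Table names cannot be bound as parameters, so validate them first.
        if not predicate.isidentifier():
            raise ValueError(f"unsafe predicate name: {predicate!r}")
        conn.execute(f'CREATE TABLE "{predicate}" (subject TEXT, object TEXT)')
        conn.executemany(f'INSERT INTO "{predicate}" VALUES (?, ?)', rows)
    return conn


def verbalize(spo_triples):
    """Turn each subject's triples into one short natural-language passage."""
    by_subject = defaultdict(list)
    for s, p, o in spo_triples:
        by_subject[s].append(f"{p.replace('_', ' ')} is {o}")
    return {s: f"{s}: " + "; ".join(facts) + "." for s, facts in by_subject.items()}


conn = induce_db(triples)
# A crisp intent with a comparison, expressed in SQL (the kind of query
# an LLM would be asked to generate in a Text2SQL setup).
newest = conn.execute(
    'SELECT subject FROM "release_year" '
    "ORDER BY CAST(object AS INTEGER) DESC LIMIT 1"
).fetchone()[0]

# Passages like these would then be embedded and indexed for text retrieval.
passages = verbalize(triples)
```

The SQL side handles comparisons and aggregations directly, while the verbalized passages give a retriever plain text to match against less well-defined questions.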

Authors: Rishiraj Saha Roy, Chris Hinze, Joel Schlotthauer, Farzad Naderi, Viktor Hangya, Andreas Foltyn, Luzian Hahn, and Fabian Küch from Fraunhofer IIS