Speech synthesis, also known as Text-to-Speech (TTS), is a technology that converts written text into audible speech. Early attempts at synthesizing human-like speech signals with electronic circuits date back to the 1930s. Over the years, computers and signal processing algorithms were introduced, yielding ever-increasing intelligibility and naturalness of synthetic speech. A real leap forward came in recent years with the introduction of artificial intelligence (AI) into TTS research. When done right, AI-based TTS can achieve an unprecedented level of naturalness and expressiveness in artificially generated voices. Consequently, the technology is now widely used in many areas of human-machine interaction, media production and communication, and the established boundaries of its industrial application and social significance are being re-explored and redefined. This is evident both in the wide range of possible applications and in the potential for misuse.

Within the DSAI project, the functionality and performance of the Fraunhofer TTS technology have been significantly advanced. The most important achievement is our multi-lingual TTS model, which can synthesize speech in several languages. It currently supports two variants of German (Standard and Austrian), two variants of English (British and American) and French. What sets our work apart from that of other research groups is the high quality of our polyglot speakers: any of the 18 voices available in our TTS can speak any of the aforementioned languages and dialects, even though only recordings in the respective voice talent's native language were used for training. Even more importantly, the synthetic voices can switch seamlessly between languages within a single sentence. This so-called code-switching ability is important for correctly pronouncing foreign words and names.
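To make code switching more concrete, the following minimal Python sketch shows one way a mixed-language sentence could be annotated so that the language condition changes mid-sentence while the voice stays the same. It is not the Fraunhofer implementation: the `Segment` class, the `language_ids` function and the language codes are hypothetical, and characters stand in for the phonemes a real front end would produce.

```python
from dataclasses import dataclass

# Hypothetical language codes; the identifiers used inside the Fraunhofer TTS are not documented here.
LANGUAGES = ["de-DE", "de-AT", "en-GB", "en-US", "fr-FR"]
LANG_ID = {code: idx for idx, code in enumerate(LANGUAGES)}


@dataclass
class Segment:
    text: str  # a span of the input sentence
    lang: str  # language/dialect tag for that span


def language_ids(segments: list[Segment]) -> tuple[str, list[int]]:
    """Concatenate the segments and return one language ID per character.

    A real system would work on phonemes after grapheme-to-phoneme
    conversion; characters are used here only to keep the sketch short.
    """
    text, ids = "", []
    for seg in segments:
        text += seg.text
        ids.extend([LANG_ID[seg.lang]] * len(seg.text))
    return text, ids


# A German sentence containing an English place name.
sentence = [
    Segment("Wir treffen uns im ", "de-DE"),
    Segment("Covent Garden", "en-GB"),
    Segment(".", "de-DE"),
]
text, ids = language_ids(sentence)
print(text)        # Wir treffen uns im Covent Garden.
print(ids[17:22])  # [0, 0, 2, 2, 2]
```

The speaker identity is deliberately absent from the per-character sequence: in this sketch it would be supplied once per utterance, so only the language condition switches at the segment boundary.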

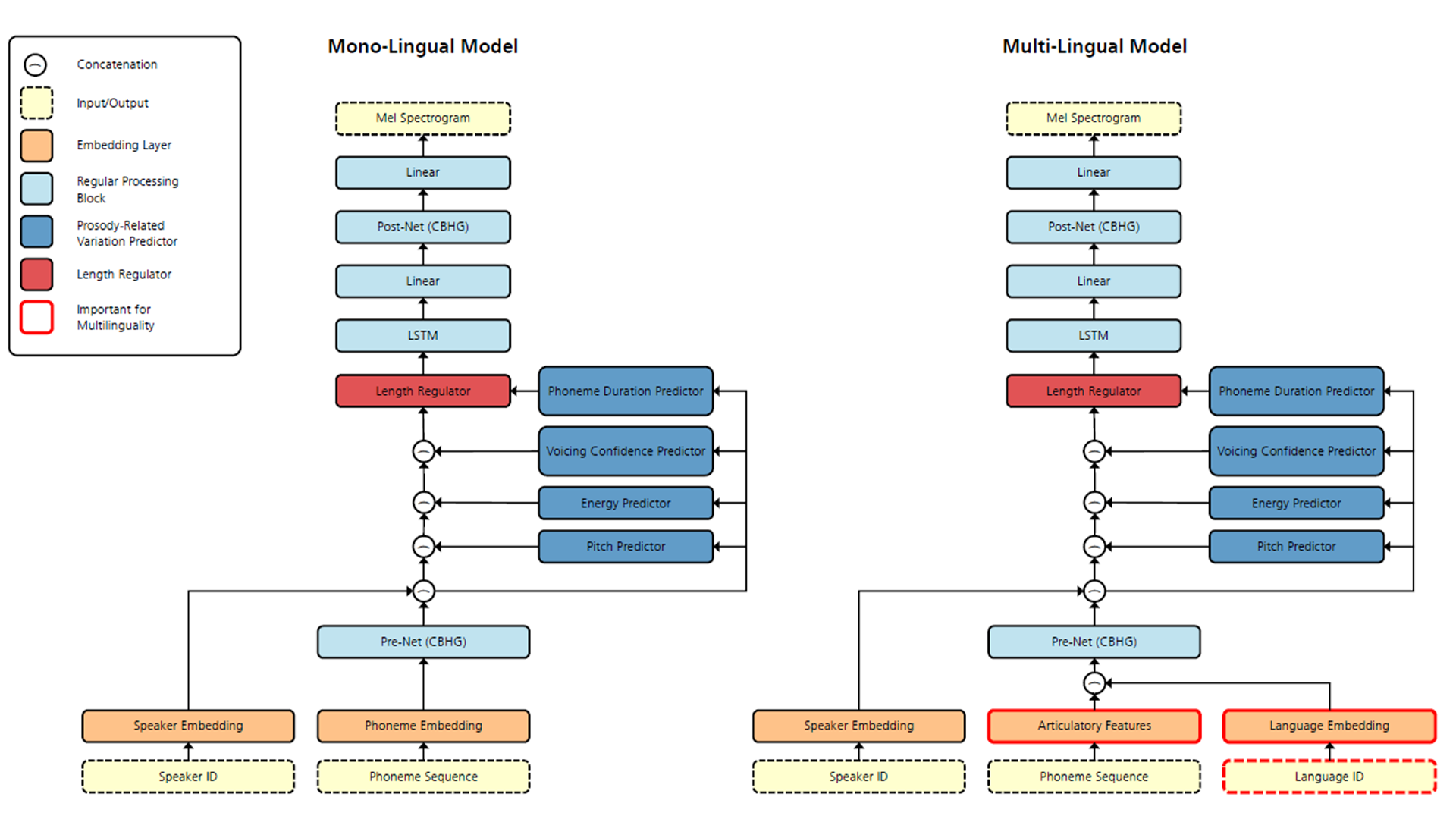

On the research side, multi-linguality is achieved through three main ingredients. First, we compiled and curated high-quality training datasets in different languages, some of which were commissioned with funds from the DSAI project. Second, we implemented so-called articulatory features, which help steer the TTS model towards learning a linguistically meaningful internal representation of the input text during training. Third, we employ language embeddings, which help the model learn speaker-independent properties of each language. A coarse overview of our model architecture is given in Figure 1. With this approach, we are able to disentangle (i.e., independently control) the spoken language from the speaking style (prosody) and voice timbre of the TTS voices. Through experiments within the DSAI project, we identified a good configuration of embedding sizes and other hyperparameters for the multi-lingual model. Subjective listening tests largely confirmed that the multi-lingual model performs better than its mono-lingual predecessor.
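The interplay of articulatory features, language embeddings and speaker identity can be illustrated with a minimal Python sketch of how per-phoneme model inputs might be assembled. Everything in it is a hypothetical stand-in rather than the actual model: the tiny feature inventory, the language codes, the embedding sizes and the seeded random tables that replace learned embeddings. The three parts are simply concatenated for readability; the real architecture may inject them at different points.

```python
import random

# Hypothetical articulatory feature inventory (a tiny subset, for illustration only).
FEATURES = ["consonant", "vowel", "voiced", "bilabial", "alveolar", "plosive",
            "nasal", "fricative", "close", "open", "front", "back"]

# Each phoneme is described by the features it has, so related sounds
# (e.g. /p/ and /b/) get related inputs instead of unrelated symbol IDs.
PHONEMES = {
    "p": {"consonant", "bilabial", "plosive"},
    "b": {"consonant", "voiced", "bilabial", "plosive"},
    "m": {"consonant", "voiced", "bilabial", "nasal"},
    "t": {"consonant", "alveolar", "plosive"},
    "s": {"consonant", "alveolar", "fricative"},
    "z": {"consonant", "voiced", "alveolar", "fricative"},
    "a": {"vowel", "voiced", "open", "front"},
    "i": {"vowel", "voiced", "close", "front"},
    "u": {"vowel", "voiced", "close", "back"},
}

LANGUAGES = ["de-DE", "de-AT", "en-GB", "en-US", "fr-FR"]  # hypothetical codes
NUM_SPEAKERS = 18          # number of voices mentioned in the text
LANG_DIM, SPK_DIM = 4, 8   # hypothetical embedding sizes


def make_table(rows: int, dim: int, seed: int) -> list[list[float]]:
    """Seeded random lookup table standing in for learned embeddings."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(rows)]


LANG_EMB = make_table(len(LANGUAGES), LANG_DIM, seed=1)
SPK_EMB = make_table(NUM_SPEAKERS, SPK_DIM, seed=2)


def articulatory_vector(phoneme: str) -> list[float]:
    """Binary feature vector: 1.0 where the phoneme has the feature, else 0.0."""
    present = PHONEMES[phoneme]
    return [1.0 if f in present else 0.0 for f in FEATURES]


def encoder_inputs(phonemes: list[str], lang_ids: list[int],
                   speaker_id: int) -> list[list[float]]:
    """Per-phoneme input: articulatory features + language + speaker embedding.

    The language is given per phoneme (allowing code switching),
    the speaker once per utterance.
    """
    if len(phonemes) != len(lang_ids):
        raise ValueError("need one language ID per phoneme")
    spk = SPK_EMB[speaker_id]
    return [articulatory_vector(p) + LANG_EMB[lang] + spk
            for p, lang in zip(phonemes, lang_ids)]


inputs = encoder_inputs(["b", "a", "m"], [0, 0, 0], speaker_id=3)
print(len(inputs), len(inputs[0]))  # 3 24
```

Because the three parts occupy separate slots in this sketch, the same `speaker_id` can be paired with any language ID without touching the articulatory part, which mirrors the idea of controlling language and voice independently.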

Author: Christian Dittmar